Ollama and Open WebUI

My graphics card is a P106-100 with 6GB of VRAM, my RAM is 64GB, and my CPU is quite old.

Therefore, I’m looking for both a suitable local large language model and a solution that works with limited VRAM.

I used Docker Compose to deploy Ollama and Open WebUI. The deployment succeeded, and the containers correctly use my local GPU.

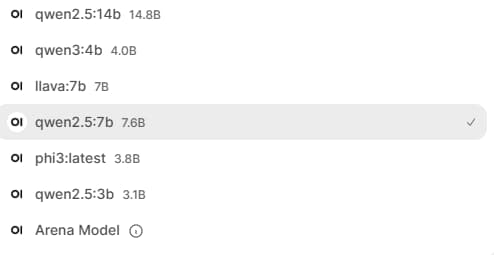
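One way to confirm that a model is really running on the GPU is to ask Ollama which models are currently loaded. The sketch below assumes the Ollama container publishes its default port 11434 on the host. It queries the `/api/ps` endpoint and compares each model's `size_vram` with its total `size`. The helper names `fetch_running_models` and `gpu_offload_report` are hypothetical. The `ollama ps` command shows a similar CPU/GPU split in its PROCESSOR column.

```python
import json
import urllib.request

# Assumption: the compose file maps Ollama's default port 11434 to the host.
OLLAMA_URL = "http://localhost:11434"


def fetch_running_models(base_url: str = OLLAMA_URL) -> dict:
    """Return the JSON body of Ollama's /api/ps endpoint (models currently loaded)."""
    with urllib.request.urlopen(f"{base_url}/api/ps", timeout=5) as resp:
        return json.load(resp)


def gpu_offload_report(ps_response: dict) -> list[tuple[str, float]]:
    """For each loaded model, return (name, fraction of its memory held in VRAM)."""
    report = []
    for m in ps_response.get("models", []):
        size = m.get("size", 0)
        vram = m.get("size_vram", 0)
        # 1.0 means fully on the GPU; lower values mean layers spilled to system RAM.
        fraction = vram / size if size else 0.0
        report.append((m.get("name", "?"), fraction))
    return report
```

With a model loaded (for example, right after sending it a message in Open WebUI), `gpu_offload_report(fetch_running_models())` should report 1.0 for a model that fits entirely in VRAM and a smaller fraction when Ollama has had to keep part of it in system memory.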

In my tests, the local models qwen2.5:3b and qwen2.5:7b run smoothly for conversations, but qwen3:4b and qwen2.5:12b do not work properly.

With qwen3:4b, GPU usage stays at 90% even when the model is not in use.

qwen2.5:12b needs more than 6GB of VRAM. Responses are so slow, arriving one word at a time, that it is unusable.
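A rough back-of-the-envelope estimate shows why the larger model does not fit. Quantized weights take about `parameters × bits_per_weight / 8` bytes, plus extra memory for the KV cache and runtime buffers. When a model does not fit, Ollama keeps some layers in system RAM and runs them on the CPU, which is much slower. The sketch below assumes about 4.5 bits per weight (a ballpark for 4-bit quantized builds) and a flat 1 GB overhead. Both numbers are assumptions, not measured values, and the function names are hypothetical.

```python
def estimate_vram_gb(params_billion: float,
                     bits_per_weight: float = 4.5,  # assumption: typical 4-bit quantization
                     overhead_gb: float = 1.0) -> float:  # assumption: KV cache + buffers
    """Rough VRAM need in GB: quantized weights plus a flat runtime allowance."""
    weights_gb = params_billion * bits_per_weight / 8
    return weights_gb + overhead_gb


def fits_in_vram(params_billion: float, vram_gb: float = 6.0) -> bool:
    """True if the rough estimate fits on a card with vram_gb of memory (6 GB for a P106-100)."""
    return estimate_vram_gb(params_billion) <= vram_gb
```

Under these assumptions a 7B model needs roughly 4.9 GB and fits on a 6 GB card, while a 12B model needs roughly 7.75 GB and does not. Longer context windows enlarge the KV cache, so the real overhead can be higher.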

I also tried other models, but currently, qwen2.5:7b is the best fit for my computer’s configuration.

Ollama Windows client

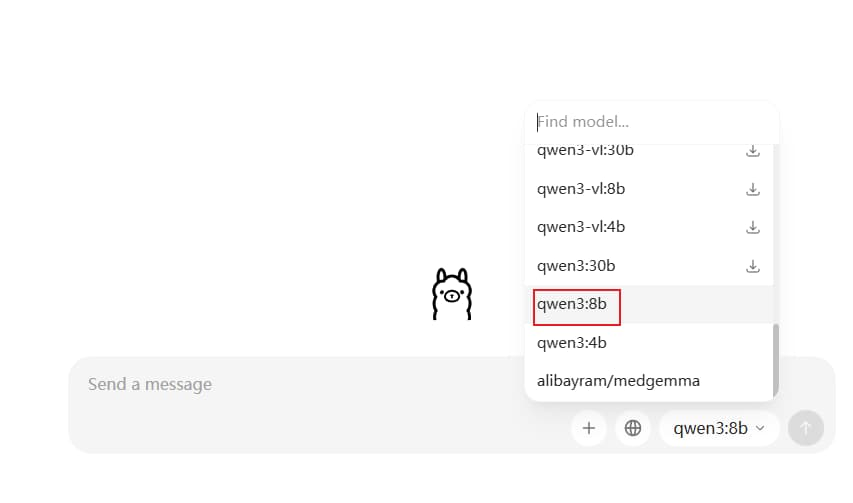

Ollama also has its own Windows client, which works differently from both the Docker deployment and the llama.cpp approach.

llama.cpp is primarily a command-line backend. Its configuration is relatively complex, but it offers the fastest performance.

I tested qwen3:4b and qwen3:8b with the Ollama Windows client. It does not have the 90% GPU memory usage issue seen with the Docker deployment. Instead, the CPU does most of the processing, and the GPU is used dynamically at only about 35% of its capacity. With the client, qwen3:8b runs smoothly.

For now, I don’t plan to test llama.cpp: it is more complex, and the performance gain isn’t significant. Rather than chasing extreme optimization, buying a graphics card with more VRAM is the more practical solution.

Local AI large language model

For now, I plan to use it mainly to translate YouTube titles and rewrite them for SEO.
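Because Ollama exposes a local REST API, title translation can also be scripted without going through Open WebUI. Below is a minimal sketch that uses only the Python standard library. It assumes Ollama is reachable at `http://localhost:11434` and that `qwen2.5:7b` has already been pulled. The prompt wording and the helper names `build_title_request` and `translate_title` are illustrative assumptions, not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama's default port is published on the host.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_title_request(title: str, target_language: str = "English",
                        model: str = "qwen2.5:7b") -> dict:
    """Build a non-streaming /api/chat payload asking for a translated, SEO-friendly title."""
    # Hypothetical prompt; adjust it to your channel's style.
    system = (
        "You translate YouTube video titles. Reply with one title only, "
        "under 100 characters, keeping the main search keywords near the start."
    )
    return {
        "model": model,
        "stream": False,  # return one JSON object instead of streamed chunks
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": f"Translate into {target_language}: {title}"},
        ],
    }


def translate_title(title: str, **kwargs) -> str:
    """Send the request to the local Ollama server and return the model's reply."""
    payload = json.dumps(build_title_request(title, **kwargs)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_CHAT_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.load(resp)
    # With stream=False, the reply text is in message.content.
    return body["message"]["content"].strip()
```

A call such as `translate_title("本地部署 Ollama 和 Open WebUI", target_language="English")` returns the model's suggested title. Setting `stream` to `False` keeps the client simple, at the cost of waiting for the whole reply before anything is returned.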

I won’t use other features much for now, since I have few use cases for them. I’m testing them mainly as a backup: an offline alternative to ChatGPT in case of internet outages, and a way to avoid privacy and security issues.

For other YouTube-related tasks, I will also try to find local AI solutions, such as subtitle extraction, AI voiceover, background removal from thumbnails, and vocal removal.